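MTCNN makes it possible to implement face detection on image or video data with very little code.

In the previous post I looked at a Caffe model trained for age/gender prediction, along with Cascade classifiers, but for face detection alone MTCNN is easier to use and also detects faces well.

The main goal of this implementation was to detect multiple faces in video data, so I added a loop to the existing code.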

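1. Installing and importing MTCNN

# In Colab, install from a Colab code cell; in Jupyter, install from the Jupyter command prompt

pip install mtcnn

import mtcnn

# matplotlib also needs to be installed, for checking images and so on.

from matplotlib import pyplot

2. Checking MTCNN's face-region detection on image data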

# load image from file

pixels = pyplot.imread('/content/sampley.jpeg')

# example of face detection with mtcnn

from matplotlib import pyplot

from PIL import Image

from numpy import asarray

from mtcnn.mtcnn import MTCNN

# extract a single face from a given photograph

def extract_face(filename, required_size=(224, 224)):
    # load image from file
    pixels = pyplot.imread(filename)
    # create the detector, using default weights
    detector = MTCNN()
    # detect faces in the image
    results = detector.detect_faces(pixels)
    # extract the bounding box from the first face
    x1, y1, width, height = results[0]['box']
    x2, y2 = x1 + width, y1 + height
    # extract the face
    face = pixels[y1:y2, x1:x2]
    # resize pixels to the model size
    image = Image.fromarray(face)
    image = image.resize(required_size)
    face_array = asarray(image)
    return face_array

# load the photo and extract the face

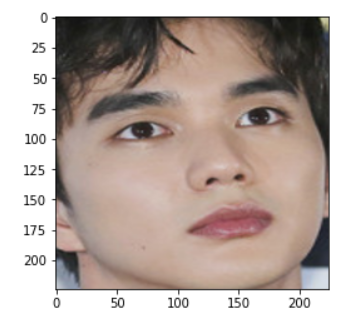

pixels = extract_face('/content/sampley.jpeg')

# plot the extracted face

pyplot.imshow(pixels)

# show the plot

pyplot.show()

3. Getting the coordinates of the surrounding region with MTCNN

pixels = pyplot.imread('/content/sampley.jpeg')

# create the detector, using default weights

detector = MTCNN()

# detect faces in the image

results = detector.detect_faces(pixels)

print(results)

The result is printed as follows.

[{'box': [104, 70, 143, 179], 'confidence': 0.9999216794967651, 'keypoints': {'left_eye': (148, 145), 'right_eye': (211, 136), 'nose': (195, 167), 'mouth_left': (172, 210), 'mouth_right': (219, 202)}}]
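Each entry in the list is a dictionary with three keys: 'box' holds the face region as [x, y, width, height] in pixels, 'confidence' holds the detection score, and 'keypoints' holds five facial landmarks. As a minimal sketch of how these values can be read, the snippet below converts a box into corner coordinates. The helper name box_to_corners is hypothetical and not part of mtcnn, and the detection is copied from the output above.

```python
# Detection copied from the printed output above.
detection = {
    'box': [104, 70, 143, 179],
    'confidence': 0.9999216794967651,
    'keypoints': {
        'left_eye': (148, 145), 'right_eye': (211, 136), 'nose': (195, 167),
        'mouth_left': (172, 210), 'mouth_right': (219, 202),
    },
}


def box_to_corners(box):
    """Convert [x, y, width, height] to (x1, y1, x2, y2). Hypothetical helper."""
    x, y, width, height = box
    return x, y, x + width, y + height


corners = box_to_corners(detection['box'])
print(corners)  # (104, 70, 247, 249)
```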

x1, y1, width, height = results[0]['box']

x2, y2 = x1 + width, y1 + height

# extract the face

face2 = pixels[y1-30:y2+30, x1-30:x2+30]

# resize pixels to the model size

image = Image.fromarray(face2)

image = image.resize((254,254))

face_array = asarray(image)

pyplot.imshow(image)

# show the plot

pyplot.show()

For face2, the pixel positions were adjusted as follows, moving each edge of the box outward by 30 pixels to widen the face region.

face2 = pixels[y1-30:y2+30, x1-30:x2+30]
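A fixed margin can push the crop past the image border when a face sits near an edge, and a negative start index in a NumPy slice counts from the end of the array instead of stopping at 0. Below is a minimal sketch of one way to guard against this. The helper expand_box is hypothetical (not part of mtcnn), and the 400x400 image size is an assumption made only for the example.

```python
def expand_box(box, margin, img_width, img_height):
    """Pad [x, y, width, height] by margin and clamp it to the image.

    Hypothetical helper; returns corner coordinates (x1, y1, x2, y2).
    """
    x, y, width, height = box
    x1 = max(0, x - margin)
    y1 = max(0, y - margin)
    x2 = min(img_width, x + width + margin)
    y2 = min(img_height, y + height + margin)
    return x1, y1, x2, y2


# Box from the sample output: far enough from the border, so nothing is clamped.
print(expand_box([104, 70, 143, 179], 30, 400, 400))  # (74, 40, 277, 279)
# A face near the top-left corner: the start coordinates stop at 0.
print(expand_box([23, 10, 52, 71], 30, 400, 400))  # (0, 0, 105, 111)
```

The crop would then be pixels[y1:y2, x1:x2], with the image height and width taken from pixels.shape[:2].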

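4. Detecting multiple faces with MTCNN

(detect_faces returns a list with one entry per detected face. The code so far used only the first entry, results[0], but with a for loop every face can be retrieved.)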

pixels = pyplot.imread('/content/PEOPLE.jpeg')

# create the detector, using default weights

detector = MTCNN()

# detect faces in the image

for results in detector.detect_faces(pixels):
    print(results)

The result comes out as follows.

{'box': [23, 62, 52, 71], 'confidence': 0.9998407363891602, 'keypoints': {'left_eye': (40, 87), 'right_eye': (64, 92), 'nose': (52, 103), 'mouth_left': (37, 113), 'mouth_right': (57, 117)}}

{'box': [106, 118, 53, 69], 'confidence': 0.9998244643211365, 'keypoints': {'left_eye': (115, 145), 'right_eye': (138, 142), 'nose': (123, 158), 'mouth_left': (118, 168), 'mouth_right': (143, 165)}}

{'box': [146, 15, 40, 50], 'confidence': 0.9995580315589905, 'keypoints': {'left_eye': (160, 32), 'right_eye': (177, 39), 'nose': (165, 47), 'mouth_left': (152, 48), 'mouth_right': (169, 55)}}

{'box': [165, 107, 64, 78], 'confidence': 0.9987497329711914, 'keypoints': {'left_eye': (176, 135), 'right_eye': (203, 144), 'nose': (178, 158), 'mouth_left': (168, 161), 'mouth_right': (198, 170)}}

{'box': [76, 73, 39, 53], 'confidence': 0.9984415173530579, 'keypoints': {'left_eye': (85, 93), 'right_eye': (104, 95), 'nose': (93, 107), 'mouth_left': (83, 109), 'mouth_right': (104, 111)}}

{'box': [100, 21, 45, 58], 'confidence': 0.9919271469116211, 'keypoints': {'left_eye': (112, 43), 'right_eye': (132, 43), 'nose': (122, 57), 'mouth_left': (110, 62), 'mouth_right': (133, 62)}}

{'box': [190, 28, 62, 73], 'confidence': 0.970379650592804, 'keypoints': {'left_eye': (205, 52), 'right_eye': (227, 63), 'nose': (203, 70), 'mouth_left': (191, 79), 'mouth_right': (211, 89)}}

To use only the box values from a result, access results['box'].
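Since every detection also carries a 'confidence' score, the boxes can be filtered before they are used. Here is a minimal sketch, using three of the detections printed above with the keypoints left out for brevity. The 0.99 threshold and the helper name confident_boxes are arbitrary choices for illustration, not something mtcnn provides.

```python
# Three detections copied from the output above (keypoints omitted).
detections = [
    {'box': [23, 62, 52, 71], 'confidence': 0.9998407363891602},
    {'box': [100, 21, 45, 58], 'confidence': 0.9919271469116211},
    {'box': [190, 28, 62, 73], 'confidence': 0.970379650592804},
]


def confident_boxes(detections, threshold=0.99):
    """Return the 'box' of every detection whose confidence is at least threshold."""
    return [d['box'] for d in detections if d['confidence'] >= threshold]


boxes = confident_boxes(detections)
print(boxes)  # [[23, 62, 52, 71], [100, 21, 45, 58]]
```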

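# To check the results as an image, the following code can be used.
import cv2
from google.colab.patches import cv2_imshow  # Colab replacement for cv2.imshow

pixels = pixels.copy()  # draw on a copy (the array returned by imread may be read-only)
for results in detector.detect_faces(pixels):
    x, y, w, h = results['box']
    print(results['box'])
    cv2.rectangle(pixels, (x, y), (x+w, y+h), (255, 255, 255), thickness=2)
# pyplot.imread gives RGB, while cv2_imshow expects BGR, so convert before showing
cv2_imshow(cv2.cvtColor(pixels, cv2.COLOR_RGB2BGR))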

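5. Face detection in video (Colab environment)

# Colab cannot open a video window for the results, so the following code is needed.
import cv2
from google.colab.patches import cv2_imshow

def videoFaceDetector(cam, required_size=(224, 224)):  # function for detecting faces in a video
    detector = MTCNN()  # use MTCNN as the detector; created once, outside the loop
    while True:
        ret, img = cam.read()  # grab a frame
        if not ret:  # no more frames, or the read failed
            break
        img = cv2.resize(img, dsize=None, fx=1.0, fy=1.0)  # adjust the image size
        rgb = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)  # OpenCV frames are BGR; MTCNN expects RGB
        for results in detector.detect_faces(rgb):  # loop for detecting multiple faces
            x, y, w, h = results['box']  # face position
            cv2.rectangle(img, (x, y), (x+w, y+h), (255, 255, 255), thickness=2)  # draw the face box on the image
        cv2_imshow(img)  # show the image in Colab (the video is displayed frame by frame)
        if cv2.waitKey(1) > 0: break  # wait up to 1 ms for a key press; stop if a key was pressed

cam = cv2.VideoCapture('/content/sample.mp4')  # open the uploaded video
videoFaceDetector(cam)  # run it (in Colab the results are displayed one frame at a time, with breaks in between.)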
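Because Colab shows the result one frame at a time, a long video produces a lot of output. One possible way to cut this down, sketched below, is to process only every Nth frame. The generator sample_frames and the step value are hypothetical and not part of OpenCV or mtcnn. The sketch only assumes a read function that returns a (success, frame) pair, which is what cam.read() returns. A fake reader stands in for the video so the snippet runs on its own.

```python
def sample_frames(read_frame, step=10):
    """Yield (index, frame) for every step-th frame until read_frame() reports failure."""
    index = 0
    while True:
        ok, frame = read_frame()
        if not ok:
            break
        if index % step == 0:
            yield index, frame
        index += 1


def make_fake_reader(n_frames):
    """Stand-in for cam.read: returns (True, frame) n_frames times, then (False, None)."""
    frames = iter(range(n_frames))

    def read():
        try:
            return True, next(frames)
        except StopIteration:
            return False, None

    return read


indices = [i for i, _ in sample_frames(make_fake_reader(25), step=10)]
print(indices)  # [0, 10, 20]
```

With a real video, a loop such as `for i, img in sample_frames(cam.read, step=10):` could take the place of the while loop, with the detection and drawing code as its body.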
